1. PG’s project

In How to Do Philosophy, Paul Graham claims that “judging from their works, most philosophers up to the present have been wasting their time.” His point is that philosophy per se is structurally flawed. How?

The trouble began with Aristotle: “he sets as his goal in the Metaphysics the exploration of knowledge that has no practical use. Which means no alarms go off when he takes on grand but vaguely understood questions and ends up getting lost in a sea of words.” In other words, because philosophy aims at the most general truths without worrying about how useful they are, it’s able to get away with nonsense. Other fields, by contrast, only seek ideas with some practical value—ideas that make it easier to do something useful; if they arrive at general truths, it’s only because abstraction usually works: it buys you simpler theories and makes your algorithms faster.

So whatever “philosophy” we’re after, PG says, we should get from able specialist non-philosophers who work their way up chains of abstraction until what they know is general enough to apply elsewhere. That way we guarantee (a) that it’s useful, since specialist non-philosophers favor the more useful of two rivals, ceteris paribus, and (b) that it’s easy to understand, since specialist non-philosophers don’t have time for difficult texts (because they are less useful, in general, than easy ones).

2. Something’s rotten in the state of Denmark

The simplest flaw in PG’s account is its scope: it implicates most philosophers up to the present, which means all one has to do to rebut it is point to a few groups of contemporary philosophers who systematically aren’t wasting their time. I take up that task in the next two sections.

This is not to elide the indirect influence, however, of even those thinkers—Kant, for instance—most susceptible to PG’s attack. That influence, I’ll argue in section 5, should give their critics pause.

Finally, I will defend the value of “doing philosophy”—an argument that I do not think is “like someone in 1500 looking at the lack of results achieved by alchemy and saying its value was as a process,” however similar it may sound.

3. Don’t mind us philosophers… of mind

What would we lose if, of the 18,460 papers at MindPapers, a “bibliography of the philosophy of mind and the science of consciousness,” we removed the 12,500 or so written by philosophers? To get an idea, let’s look at the 100 most-cited works.

The first to go would be Jerry Fodor’s 1983 book The Modularity of Mind, in which he revived an idea that phrenologists once took for granted, albeit in a perverted form: “that many fundamentally different kinds of psychological mechanisms must be postulated in order to explain the facts of mental life.” He argues, in other words, that for our mind to work the way it does there must be vertically integrated components (modules) in our brain, like the “universal grammar” faculty proposed by Chomsky. Fodor’s book cleared up a lot of conceptual misunderstandings, generated testable predictions, and set the stage for decades of cognitive science that has (largely) borne out his view.

We would also lose John Searle’s 1980 paper Minds, brains, and programs, which gave a negative answer to one version of Turing’s question, “Can machines think?”: namely, whether a machine could think just by running the right program. As support he introduced his famous Chinese Room thought experiment, which apparently defeats a functional account of intelligence by showing that if understanding Chinese were an algorithm merely “run” on one’s brain, then it could be run in similar fashion by a man in a room shuffling slips of paper about. Would we then say that the man, or for that matter the room, understands Chinese? If not, then no program, however clever, understands the way that we do: there is something special (he called it “causal powers”) about our brains.

Searle’s paper is important because if he’s right then a whole lot of AI researchers trying to make computer programs with “general intelligence” ought to move on to something else. If wrong, then we ought to figure out how he’s wrong—because that will be the secret to making machines think the way we do.

We would lose Thomas Nagel’s 1974 exploration and articulation of phenomenal experience, What is it like to be a bat?; Daniel Dennett’s The Intentional Stance, and all its elegant abstractions; Andy Clark’s (and Rodney Brooks’s) idea that our minds are fundamentally embedded in the world, and that our inner model of our environment is far sparser than once thought—articulated recently in Being There: Putting Brain, Body, and World Together Again; etc.

What’s more, we would lose thousands of articles that clear the ground for, inform, or integrate findings across the brain sciences. Ned Block’s influential review, Philosophical issues about consciousness, is a nice recent example.

Perhaps the scariest omission would be the work of one of my intellectual heroes, Douglas Hofstadter. The little-known computer programs produced under his watch by FARG (the Fluid Analogies Research Group) at Indiana, such as Metacat and Letter Spirit, could stay, and so could his 1976 “Energy levels and wave functions of Bloch electrons in rational and irrational magnetic fields,” but Gödel, Escher, Bach: An Eternal Golden Braid would have to go: it is too much a search for the most general truths, not enough a work of original, concrete research; there is too much unconstrained philosophizing in there.

If it seems troubling to keep only some of Hofstadter’s work while throwing out the rest (the far more influential rest, no less), then you have probably begun to appreciate the special relationship between contemporary philosophy of mind and “hard” cognitive science: they are of one mind, sometimes literally. Divorcing the two would probably hurt them both.

What language game?

PG argues that philosophy is, at bottom, a “language-game” in the Wittgensteinian sense. Here’s how he puts it:

There are things I know I learned from studying philosophy. The most dramatic I learned immediately, in the first semester of freshman year, in a class taught by Sydney Shoemaker. I learned that I don’t exist. I am (and you are) a collection of cells that lurches around driven by various forces, and calls itself I. But there’s no central, indivisible thing that your identity goes with. You could conceivably lose half your brain and live. Which means your brain could conceivably be split into two halves and each transplanted into different bodies. Imagine waking up after such an operation. You have to imagine being two people.

The real lesson here is that the concepts we use in everyday life are fuzzy, and break down if pushed too hard.

This is why, apparently, philosophy ends up looking like a “word salad,” like a “garbled message”—“hard to understand because the writer was unclear in his own mind” and not “because the ideas it represents are hard to understand.”

My response is twofold. For one, the split-brain example he gives is, at bottom, about the word “I.” Fine. But once you know what the word “I” actually means, you have not just learned that your everyday concept was fuzzy, that it breaks down when you push it too hard; you’ve developed a richer model of the self, (hopefully) including all of the complicated neural, computational, and functional details.

Thus a petty semantic question (what is the meaning of the word “I”?) can open up a deeper line of inquiry: How do we form an integrated sense of self? Why do we even have a word like “I,” when our brain is apparently just a soup of meaningless symbols? Those are questions that, once articulated properly, can be explored by philosophers and scientists alike. (See Hofstadter’s “Who Shoves Whom Around Inside the Careenium?, or, The Meaning of the Word ‘I’” for one such exploration.)

This kind of thing happens all over the place. Philosophical debates about “metaphysical necessity” sure sound like wasteful bickering over the names of things, until a murder trial turns on whether someone “could have possibly acted differently”; ethicists may seem like they’re in the clouds, except when one of their moral frameworks gets written into a country’s constitution; Hilary Putnam’s famous paper, The Meaning of “Meaning”, feels hopelessly out of touch with reality (we all get along just fine without knowing the first thing about “intension” or “extension”), but it raises questions that figure deeply in language learning and AI. And so on.

It’s easy to say that philosophy is one big conversation about the color of the bikeshed. What that misses, though, is that the right question asked in the right way can cause a paradigm shift (a term due, incidentally, to a philosopher):

- “What is the meaning of the word ‘I’?”

- “How can someone ever cross a room?”

- “Can machines think?”

- “Are meanings in the head?”

- “Is the mind organized horizontally or vertically?”

- “What constitutes moral responsibility?”

- “Are mathematical objects real?”

- “Is the mind a blank slate?”

- “Do macroscopic objects actually exist?”

- “Is the accused to be presumed innocent, or guilty?”

That last one brings me to a brief aside: nearly every legal opinion I’ve ever read had some linguistic issue at its core. Example: should the blotter paper used to drop acid count toward the drug’s weight, which determines the length of mandatory sentences? The argument comes down to the phrase “mixture or substance containing a detectable amount” (see Chapman v. United States, 500 U.S. 453 (1991)). Point being, wresting imprecise words out of their natural context and plugging them into a perverted formalism is the law as we know it; it’s practically the whole game. We don’t attack it, though, because we know that lawyers’ “language-games” help to draw increasingly fine lines around difficult ideas: to, as it were, measure the coast of Britain. I think the same should be said of philosophy.

My second objection to PG’s “word salad” comment is more straightforward: most contemporary philosophers of mind do write well. So well, in fact, that I think one can become a better writer just by reading them. They know their Strunk & White and most would probably appreciate PG’s aesthetic. See Eric Lormand’s But Momma Never Told Me about Philosophy Papers for evidence (or just look at any of the papers mentioned above).

4. Problematizing a purely syntagmatic critique of postmodern critical theory

PG’s biggest enemy here, besides the ancient philosophers, seems to be postmodern critical theorists. Example:

The field of philosophy is still shaken from the fright Wittgenstein gave it. [13] Later in life he spent a lot of time talking about how words worked. Since that seems to be allowed, that’s what a lot of philosophers do now. Meanwhile, sensing a vacuum in the metaphysical speculation department, the people who used to do literary criticism have been edging Kantward, under new names like “literary theory,” “critical theory,” and when they’re feeling ambitious, plain “theory.” The writing is the familiar word salad:

Gender is not like some of the other grammatical modes which express precisely a mode of conception without any reality that corresponds to the conceptual mode, and consequently do not express precisely something in reality by which the intellect could be moved to conceive a thing the way it does, even where that motive is not something in the thing as such. [14]

Elsewhere, on his Quotes page, we find another jab:

“Simultaneously reifying and challenging hegemonic codes of race, class, gender and regional or national identity, his characters explore the complex and changing postmodern cultural landscape.”

Robert Bennett, English professor at Montana State, announcing a panel discussion about Brad Pitt

To a certain extent I’m on board. Bennett’s sentence is incredibly vague (though I imagine part of that comes from its being an announcement). And I find a lot of the writing done by postmodern critical theorists to be obfuscatory bullshit. This is not to say, however, that all of it is. Nor does it follow that someone who writes like an idiot must be saying something as unimportant as PG takes Kant’s musings to be (more on that in section 5).

I can’t help but think that PG is taking a line that a lot of people take, which is to laugh at how these lefty hipster professors put on such a show for something as trivial as Brad Pitt. The idea is that something like “Every connected, locally compact, non trivial group has a non trivial, closed, invariant subgroup” from a math exam is completely unilluminating, but at least we go in expecting not to understand anything; we assume the stuff is way beyond us. But with cultural theory, postmodern theory, etc., the lay reader expects to be able to talk about things like “culture,” “literature,” and “Brad Pitt” at a pretty high level. So when they see technical phraseology (“complexly situated within,” “mutual historicization of,” “theorizing the subject,” etc.), they can’t help but feel hoodwinked, as if something that’s supposed to be understandable has been made needlessly complex.

But if we take the operations of language and culture to be as complicated as any other object of study, and if we allow that certain professionals within the field want to use shared tokens (e.g. “historicization”) to collapse complex ideas that show up all over their literature, then we can begin to understand why this stuff sounds the way it does. They’re not writing for every Joe Intellectual, just for the people who put the time in to be part of their club.

Compare, for the sake of illustration, two statements of Stokes’ Theorem. One is for a more general audience, like Calc II or first-year physics students:

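The curl theorem states that, for a smooth vector field $\mathbf{F}$ on an oriented surface $S$ with boundary curve $\partial S$,

$$\oint_{\partial S} \mathbf{F} \cdot d\mathbf{r} = \iint_S (\nabla \times \mathbf{F}) \cdot d\mathbf{S}$$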

The other, of which the first is just a special case, is for mathematicians:

Let M be an oriented smooth manifold of dimension n and let α be an n-differential form that is compactly supported on M. The integral of α over M is defined as follows: Let {f_i} be a partition of unity associated with a locally finite cover {U_i} of (consistently oriented) coordinate neighborhoods...
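To make “of which the first is just a special case” concrete, here is a sketch in standard notation that the excerpt above does not spell out. For a compactly supported $(n-1)$-form $\omega$ on an oriented smooth $n$-manifold $M$ with boundary $\partial M$, the general theorem is the first line below. Taking $M$ to be a surface $S$ in $\mathbb{R}^3$ and $\omega = F_1\,dx + F_2\,dy + F_3\,dz$, the left side becomes the line integral $\oint_{\partial S} \mathbf{F} \cdot d\mathbf{r}$, and the exterior derivative works out to the second line, whose coefficients are exactly the components of $\nabla \times \mathbf{F}$ paired with the surface element. That is how the curl theorem falls out.

$$
\begin{aligned}
\int_{\partial M} \omega &= \int_M d\omega \\
d\omega &= \left(\frac{\partial F_3}{\partial y} - \frac{\partial F_2}{\partial z}\right) dy \wedge dz
+ \left(\frac{\partial F_1}{\partial z} - \frac{\partial F_3}{\partial x}\right) dz \wedge dx
+ \left(\frac{\partial F_2}{\partial x} - \frac{\partial F_1}{\partial y}\right) dx \wedge dy
\end{aligned}
$$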

The analogy back to critical theory should be apparent. On one level you have concrete, special cases with correspondingly concrete writing. This seems to be the level that PG would be interested in, if any. To get access to it you can look at magazine covers critically, or remark on the homosexual tension in Fight Club, or read something like Introducing Postmodernism or David Foster Wallace’s incisive essay on television and U.S. fiction, E Unibus Pluram, which intentionally eschews the standard vocabulary.

On another level is the “deeper” theory or more general framework. To an outsider, a conversation on this level sounds the way two people talking about StarCraft sound to someone who has never played. But there is more content here, too, just as there’s more content in the second formulation above. Perhaps the extra content the deeper level buys you is smaller in critical theory than in mathematics, but I suspect it’s far greater than PG thinks.

I say that because I also had strong misgivings about the subject (for its insuperable tomes, hipster followers, and radical political agenda) before finally easing into it via writers like Richard Dyer, Patsy Yaeger, Simon Gikandi, and Fredric Jameson. I took a class on culture theory with a professor who found herself “increasingly irritated by the French philosophers,” and whose reading selections were recent and down-to-earth; Dani Cavallaro’s Critical and Cultural Theory was a fine textbook. After a bit of training, sentences that I once found unreadable (e.g. Foucault’s or Barthes’s) became just as crisp and insightful as PG’s best. I had only to learn the language.

I’m reminded of how chess experts can recall positions taken from real games with far greater fidelity than someone who doesn’t play, but perform about equally when the pieces are arranged in ways they’d never see in a real game. The difference, of course, is that a real game position means a lot more to the expert than to the lay person: he can consider strategic features like “pawn structure” and “control of the center,” and recognize setups (“a Sicilian Defense,” say) that he’s encountered before, whereas a jumble of pieces reduces them both to rote memorization. My point is that if a work of critical theory looks like a “garbled message,” it could be that it really is garbled (e.g. Derrida), or it could be that one simply hasn’t read enough of the stuff to know.

Good ideas

I fear that I’ve spent too much of my defense on the language of critical theory and not enough on the actual ideas. But I think that PG attacks these guys because of the language, and could, for all I know, not give a hoot about what they’re actually saying. In case that’s what happened, here are some of their good ideas off the top of my head:

All language is political; capitalism-as-market-system is tremendously good, but capitalism-as-defining-roles-in-terms-of-production is dangerous; there is a causal connection between Cosmopolitan and increased labiaplasty among 14-year-olds; the simulacrum; the panopticon; art once made the strange familiar, and now it makes the familiar strange; gays appropriated “queer” and largely defused it: could “nigger” be defused, and how?; television uses self-aware irony to swallow attacks leveled against it; the medium is the message; photography is a special kind of representation because it feels objective; if you don’t think Aladdin is racist, then your working definition of “racist” is inadequate; etc.

These are the kind of ideas that satisfy PG’s criterion: they “would cause someone who understood them to do something differently.”

Interlude: PG and brevity

I think it’s worth commenting on PG’s apparent preoccupation with brevity. See, for example, his handle (pg), the URLs on paulgraham.com, his comments on writing, and these excerpts from a few of his essays:

One thing hackers like is brevity. Hackers are lazy, in the same way that mathematicians and modernist architects are lazy: they hate anything extraneous.

and

[on developing Arc:] I didn’t decide what problems to work on based on how hard they were. Instead I used what might seem a rather mundane test: I worked on things that would make programs shorter.

Why would I do that? Because making programs short is what high level languages are for. It may not be 100% accurate to say the power of a programming language is in inverse proportion to the length of programs written in it, but it’s damned close.

I bring it up because I think he may occasionally make sacrifices in the service of his aesthetic. He may elide the complexities of an argument—saying “most philosophers” instead of singling out specific people or schools of thought—to make sure an essay reads well. Or he may ignore what you’re saying if you say it poorly, like if you’re a critical theorist.

The curious thing about PG’s writing is that every sentence sounds… not dispassionate, but not emphatic, either. There are no vicissitudes of character, there is no modulating voice that rises with the important bits and falls with the bits it’s not so sure about. There is just a monotone that declares, sentence after sentence, the self-evident truth.

What that means is that something like

Books on philosophy per se are either highly technical stuff that doesn’t matter much, or vague concatenations of abstractions their own authors didn’t fully understand (e.g. Hegel).

sounds just as vanilla, to the point, and obviously “the way it is” as this:

Embedded languages (or as they now seem to be called, DSLs) are the essence of Lisp hacking.

We’re tempted to take his word on the one because we respect his authority on the other, just as Apple fans gawked at the iPhone as much as they had at the iPod, because Steve Jobs had the same magical pitch each time.

5. A web of knowledge and a pretty picture

It took several centuries to prove that the general quintic is unsolvable by radicals, a result due to Paolo Ruffini and Niels Henrik Abel and later explained in full generality by Évariste Galois while he was still a teenager. Most of those who came before were wrong, and many were hopelessly wrong: they worked in the wrong direction, trying to devise a “quintic formula” instead of proving one couldn’t exist.

Were all of these people wasting their time? To say so would vastly oversimplify history.

I’m reminded of the following account of creativity (Koestler 1964, cited here):

The moment of truth, the sudden emergence of a new insight, is an act of intuition. Such intuitions give the appearance of miraculous flashes, or short-circuits of reasoning. In fact they may be likened to an immersed chain, of which only the beginning and the end are visible above the surface of consciousness. The diver vanishes at one end of the chain and comes up at the other end, guided by invisible links.

More concretely,

There is a tendency to completely overlook the power of brain algorithms, because they are invisible to introspection. It took a long time historically for people to realize that there was such a thing as a cognitive algorithm that could underlie thinking.

I think the description serves equally well for innovation, where lots of unseen activity occasionally generates a good idea. Our tendency, of course, is to focus on the ends—that is usually all that we can see. Think of Marconi getting credit for the radio, or, on the other side, “the strong ‘first-mover’ effect under which the first papers in a field will, essentially regardless of content, receive citations at a rate enormously higher than papers published later” (arXiv:0809.0522v1).

This is not to advocate for some kind of “butterfly effect” whereby we should recognize everyone’s mysterious role in a delicate chain of influence. But there is a chain of influence, and we should be careful not to ignore large swaths of it.

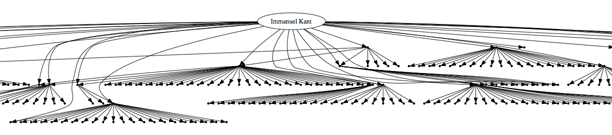

That includes all those people who were wrong, or whose “programs” were fundamentally misguided—because I don’t think we could confidently say we’d be better off without them. See, for example, Immanuel Kant, a philosopher who on PG’s account basically wasted his time, but whose influence extends by way of second- and third-degree connections through all kinds of treasured scientists, sociologists, philosophers, authors, painters, etc.:

The image shows three levels of “Influenced” infobox links from Wikipedia; click for full version [pdf]. (Download source.)
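Collecting “three levels” of influence amounts to a depth-limited breadth-first search outward from one thinker. The sketch below assumes the “Influenced” relation has already been gathered into a plain dictionary; the function name `influence_levels` and the toy graph (nodes `A` through `F`) are hypothetical placeholders, not Wikipedia data, and this is not necessarily how the figure above was produced.

```python
from collections import deque

def influence_levels(graph, root, max_depth=3):
    """Return {name: depth} for everyone reachable from root within max_depth hops.

    graph maps each name to the list of names it "Influenced"
    (a hypothetical stand-in for scraped infobox links).
    """
    depth = {root: 0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        if depth[node] == max_depth:
            continue  # last level reached: record it, but don't expand further
        for child in graph.get(node, []):
            if child not in depth:  # in BFS the first visit is the shortest path
                depth[child] = depth[node] + 1
                queue.append(child)
    return depth

# Toy graph with invented edges, for illustration only.
toy = {"root": ["A", "B"], "A": ["C"], "B": ["C", "D"], "C": ["E"], "E": ["F"]}
levels = influence_levels(toy, "root")
print(levels)  # {'root': 0, 'A': 1, 'B': 1, 'C': 2, 'D': 2, 'E': 3}
```

`F` sits four hops out, so it falls outside the three levels; raising `max_depth` widens the net, which is why such pictures grow so quickly.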

Would it really be fair to say he “wasted his time”? Or did his work set the stage—even if only as a guide to what not to do—for the kind of “miraculous flashes” we now take for granted?

6. Philosophy is my favorite class

From a footnote to PG’s essay:

Some introductions to philosophy now take the line that philosophy is worth studying as a process rather than for any particular truths you’ll learn. The philosophers whose works they cover would be rolling in their graves at that. They hoped they were doing more than serving as examples of how to argue: they hoped they were getting results. Most were wrong, but it doesn’t seem an impossible hope.

This argument seems to me like someone in 1500 looking at the lack of results achieved by alchemy and saying its value was as a process. No, they were going about it wrong. It turns out it is possible to transmute lead into gold (though not economically at current energy prices), but the route to that knowledge was to backtrack and try another approach.

If everything you learned in philosophy class were useless, and other classes, in addition to teaching you valuable truths, also used the same “process” as philosophy, then it would probably make sense to jump ship. But I don’t think that’s the case.

For one, there are plenty of valuable truths to be learned in philosophy—that has been the burden of the rest of this essay, and I hope we can dispense with it now.

But I’d also argue that (good) philosophy classes are special:

Philosophy professors are very smart. Say what you will about the material, but it tends to attract sharp thinkers. On its own that wouldn’t mean much, except that…

There’s a lot of action in a philosophy class. My smartest professors have been math and computer science guys, but I only know that by reading about them—there is unfortunately not a lot of back-and-forth in math class, even though there could be. In philosophy, though, that’s all there is: someone presents an objection to the reading, and—if nobody else will—the professor chips away. There is tons of dialogue, and not the kind of noise that you find in a lot of English classes: in philosophy, as in math, there is a wrong answer (or at least a poorly argued one).

It’s hard to argue well. You learn a lot of the same stuff in philosophy as you do in a proof-based math class, which basically comes down to:

- how to solve hard problems:
  - break it down,
  - work through examples,
  - think extremes,
  - etc.
- how to express your solution (writing proofs, mostly)

Trouble is, because there is so little talking in math classes, most of this stuff happens on your own in a room somewhere; you don’t have to construct or destroy an argument on the spot. If nothing else, a few hours of good philosophy talk among people much smarter than you teaches you that it’s a lot harder than it looks to (a) defeat even the dumbest-sounding arguments, (b) construct a solid argument of your own, and (c) respond to attacks, all in real-time.

Philosophy papers != English papers. In my experience you can write your way out of an English paper, which is to say, you can think (somewhat) poorly, write well, and get an A. Not so in philosophy. I think this has to do with literary analysis in general; see PG’s essay on essays.

Good philosophy articles are like squash videos. Squash is one of those sports where, if you’re at my level at least, just watching professionals play can noticeably improve your game. The same seems to be true of good philosophy articles. (It might also be true of good math articles, though most mortals can’t read those until grad school; but that’s another essay…)

Of course, this could all have to do with the special mix of classes that I’ve taken. But I have found that contemporary analytic philosophy offers excellent intellectual training.

7. Conclusion

There has been a lot of “bad” philosophy… maybe even most of it. But the subject came clean on that point a long time ago (say, the 1960s) and has been recovering since.

I’ve tried to give a picture of what I take to be the good stuff, and I can only hope that it is not offensively incomplete.

Read the discussion on Hacker News